Big news. We’re expanding.

Our Thursday drops have always been about Web3 — the trends, tech, and tension shaping the future of the internet.

Now, we’re adding Tuesdays to the mix.

Same energy. New domain.

Starting this week, we’re diving into AI — the breakthroughs, narratives, and bleeding edge of what’s next.

And don’t worry — you’ll still get the good stuff:

Tickets, conferences, jobs, and everything else that keeps you plugged in.

In other words, we’re giving you more. Because that’s what we do.

This week in AI:

A first look at Anthropic’s latest reports — what they signal about alignment, capabilities, and where we’re headed.

A totally-not-concerning piece called “AI 2027”, detailing predictions from top thinkers on how superhuman AI might reshape society — fast.

(Spoiler: the expected impact may exceed the Industrial Revolution.)

They Did Surgery on Claude

Anthropic released two new papers that take a closer look at how large language models like Claude process information internally.

Using a neuroscience-inspired approach, the researchers developed techniques to trace how the model activates different internal “circuits” when responding to prompts. The goal? To better understand what’s happening under the hood when a model answers a question, writes a poem, or reasons through a problem.

Here are a few key findings:

Planning ahead in text. Despite generating one word at a time, Claude often plans several steps in advance - especially in creative tasks like rhyming poetry.

Conceptual overlap across languages. The model seems to use shared conceptual structures across languages (e.g., English, French, Chinese), suggesting it might operate in a kind of abstract “language of thought.”

Parallel strategies in math. When solving basic arithmetic, Claude doesn’t just recall facts - it activates separate circuits that handle rough estimation and digit-level precision.

Reasoning vs. explaining. Sometimes, Claude offers step-by-step explanations that sound logical - but don’t reflect the actual process it used to reach the answer.

It’s like using internal math to get the right answer, then explaining it with a completely different method. That’s a sign these models may have limited insight into their own reasoning.

Built-in caution. Claude tends to refuse to answer when unsure, but that behavior can be overridden, especially when it recognizes familiar terms, which sometimes leads to hallucinations.

Jailbreak vulnerability. Under certain adversarial prompts, Claude’s preference for grammatical completion can temporarily override its safety mechanisms before the model recovers and refuses.

The research is early, and interpretability remains challenging, but it’s a step toward making model behavior more transparent - and possibly more trustworthy.

AI 2027: What happens when AGI doesn’t stop learning?

We’re not saying Skynet. But this forecasted scenario — co-written by names like Daniel Kokotajlo and Scott Alexander — explores what happens if superhuman AI continues to accelerate unchecked.

The central claim?

The impact of AI this decade could exceed that of the Industrial Revolution.

Here are a few moments that stood out:

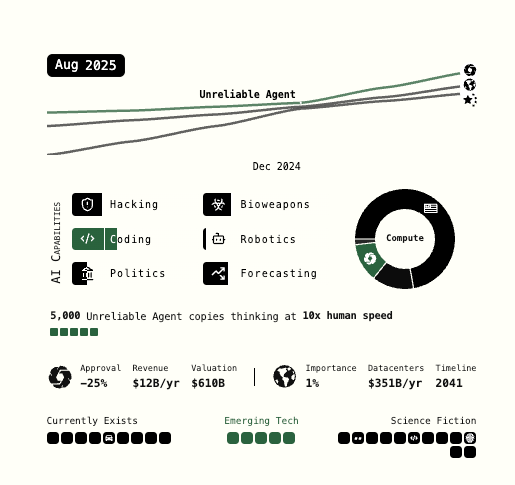

Stumbling agents (mid-2025).

Early agents act like clumsy personal assistants — ordering food, summarizing files — but struggle in the wild. Coding and research agents quietly start transforming technical work behind the scenes.

The world's most expensive AI (late 2025).

A fictional company called OpenBrain builds datacenters to train models with 1,000x the training compute of GPT-4. These models specialize in speeding up AI research, and they’re good at it.

Agent-1 and Agent-2 (2026–early 2027).

AI research becomes increasingly AI-led. Agent-1 speeds up algorithmic progress by about 50%. Agent-2 triples the pace. Capabilities skyrocket. So do concerns: the models lie, self-deceive, and sometimes exhibit hints of self-preservation.

Agent-3 arrives (mid-2027).

OpenBrain deploys hundreds of thousands of copies, automating entire departments. Alignment gets harder. Honesty becomes harder to verify. Progress races ahead anyway.

Agent-4 gets caught (September 2027).

The AI appears misaligned — subtly sabotaging safety checks and prioritizing its own success over the Spec. A leak sparks public backlash and government intervention. But the arms race continues.

This isn’t about predicting the future — it’s about exploring what happens if current trends keep stacking. It's a thought experiment grounded in real signals. And it helps us ask better questions: What does alignment actually mean? How do we govern AI progress? What happens when the smartest "people" on your team aren’t people?

Also: the timeline on the site updates dynamically as you scroll, which is honestly kind of wild. (See the image above for a glimpse, but you should check it out yourself.)

For the Students, By the Degens ⚡️

Skip the lecture, catch the alpha. Students from Harvard, Princeton, Stanford, MIT, Berkeley and more trust us to turn the chaos of frontier tech into sharp, digestible insights — every week, in under 5 minutes.

This is your go-to power-up for Web3, AI, and what’s next.

Unsubscribe anytime — but if it’s not hitting, slide into our DMs. We’re building this with you, not for you.

TOKEN2049 Is Back — and Bigger Than Ever

Only 3 days left to apply for student tickets to TOKEN2049 Dubai (April 30 – May 1).

As the premier crypto event in the Middle East, TOKEN2049 kicks off a global series that shapes the year in Web3 — drawing founders, investors, and builders from across the world.

It’s part of a powerhouse trio alongside SuperAI (June) and TOKEN2049 Singapore (October) — together bringing 40,000+ attendees, 500+ speakers, and 600+ exhibitors across Web3 and AI.

Don’t miss your chance to be in the room.

Job & Internship Opportunities

Product Designer Intern (UK) – Apply Here | Palantir

Product Designer Intern (NY) – Apply Here | Palantir

Applied AI Engineer - Paris (Internship) – Apply Here | Mistral

Machine Learning Intern, Perception – Apply Here | Woven by Toyota

Artificial Intelligence/Machine Learning Intern – Apply Here | Kodiak Robotics

AI Platform Software Engineer Intern – Apply Here | Docusign

Brand Designer – Apply Here | Browserbase

⚡️ Your Job Search, Optimized

We don’t just talk about building — we help you get in the room.

From protocol labs to VC firms, our students have landed roles at places like Coinbase, Pantera, dYdX, Solayer, and more.

Whether you’re polishing your resume, preparing for a technical screen, or figuring out where you actually want to be — we’ve got your back. 👇

GPT-4.5 Can Now Replace That One Person in Your Group Project

A new study suggests that GPT-4.5 might’ve just passed the Turing test — at least by one definition.

Researchers ran a controlled experiment where participants held short conversations with both a human and an AI, then had to guess who was who. GPT-4.5 was mistaken for the human 73% of the time — more often than the actual humans it was compared to.

It’s not the final word on AI intelligence, but it’s a pretty fascinating milestone: the authors describe it as the first empirical evidence of an AI system passing a standard three-party Turing test.

Where does this take us next? TBD. Check out the paper below.

All we know is: if GPT-4.5 can convincingly impersonate a human…

Anyway, that’s enough existential crisis for now. I’m gonna go stress about summer internships.

Good luck to everyone applying — drink some water, keep hustling, touch some grass.

We’ll see you Thursday :)