The AI Doctor Will See You Now (Literally)

PLUS: Rethinking agent business models, self-reasoning LLMs, and so much more.

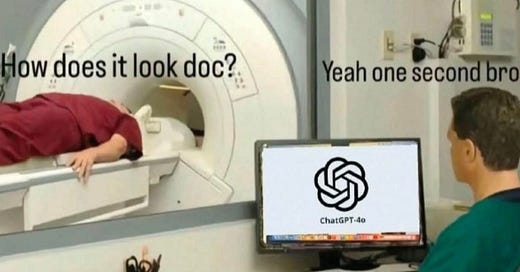

This week’s story comes courtesy of Google DeepMind: AMIE, the once-text-only AI doc, just got a multimodal brain transplant. It now requests your rash photos, reads your ECGs, and makes diagnostic decisions with the calm confidence of a sleep-deprived resident on espresso drip.

AMIE runs on a structured, three-phase state machine (History Taking → Diagnosis → Management) while constantly checking for gaps in its own knowledge. If it’s unsure? It asks. (“Yeah, I’m gonna need to see that weird mole, bro.”)
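For the builders in the room, here is a toy sketch of that pattern: a phase machine that refuses to advance while it still has a knowledge gap. Everything in it is an assumption for illustration (the `REQUIRED` table, the `step` and `run` helpers, the item names); it is not how AMIE is actually implemented.

```python
from enum import Enum


class Phase(Enum):
    HISTORY_TAKING = 1
    DIAGNOSIS = 2
    MANAGEMENT = 3


# Hypothetical: what each phase needs to know before it may hand off.
REQUIRED = {
    Phase.HISTORY_TAKING: {"symptoms", "duration"},
    Phase.DIAGNOSIS: {"skin_photo"},
    Phase.MANAGEMENT: set(),
}


def step(phase, known):
    """Return (next_phase, request); request is None when nothing is missing."""
    missing = REQUIRED[phase] - set(known)
    if missing:
        # Gap detected: stay in the current phase and ask for one missing item.
        return phase, sorted(missing)[0]
    if phase is Phase.MANAGEMENT:
        return phase, None  # terminal phase, nothing left to do
    return Phase(phase.value + 1), None


def run(answers):
    """Drive the machine; `answers` maps each requested item to the patient's reply."""
    phase, known, asked = Phase.HISTORY_TAKING, {}, []
    while True:
        next_phase, request = step(phase, known)
        if request is not None:
            asked.append(request)
            known[request] = answers[request]
        elif next_phase is phase:
            return phase, asked
        phase = next_phase
```

Calling `run({"symptoms": "itchy rash", "duration": "3 days", "skin_photo": "rash.jpg"})` walks all three phases and records each question asked along the way. The point of the design is that "ask when unsure" is a transition rule, not an afterthought.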

If that sounds like rocket science, that’s because it kind of is. Diagnostic agents like AMIE are operating at the bleeding edge of research, medicine, and AI reasoning. But don’t worry—there’s still plenty of room to build agents that don’t require an MD or FDA approval.

Healthcare might be the most complex frontier for agentic systems - but it's not the only one. And most of us aren’t deploying into clinics anyway. Other agents are quietly eating customer support, enterprise ops, and back-office workflows. This week, we dive into that layer: where agents are being deployed not to save lives, but to save time—and money. Bret Taylor (Sierra, ex-Facebook CTO) lays out how agent-based software is reshaping the business model stack, and why vertical tools that do the job are outpacing generic platforms that just talk about it.

And if you’re more into training than deployment, we’ve got a treat: a look at Absolute Zero, a new framework where agents generate their own tasks, solve them, and get smarter - without a single human label. Self-reasoning is emerging, and it’s weird in all the best ways.

Whether you're building, researching, or just trying to keep up - we've got layers to unpack today.

Let’s get into it.

The Outcome Era: How AI Agents Are Restructuring Software Itself

Bret Taylor's conversation reveals how someone who "rewrote Google Maps in a weekend" learned to stop being just an engineer and start solving real business problems. His journey from Facebook CTO to building Sierra offers a rare window into what actually works when turning AI into revenue.

1. The Market Has Three Layers - Only One Matters for Startups

Bret sees AI splitting into foundation models (capital-intensive, will consolidate like cloud providers), tools (vulnerable to foundation model companies), and applied AI/agents. His take: "I think there's been a little too much excitement around the models themselves... the value is taking this amazing technology and solving a valuable business problem." The opportunity isn't in competing with OpenAI—it's in building the AI agent that handles ADT's security alerts or SiriusXM's subscription changes.

2. Stop Selling Features, Start Replacing Jobs

Sierra doesn't charge for API calls or seats. They charge when their AI successfully resolves a customer issue without human intervention. Bret's framing: "If you're selling antitrust review... you're providing something that very high-cost labor produced before." This shift from productivity enhancement to job completion changes the entire market size. As Bret notes, no pure-play SaaS company has hit trillion-dollar valuations, but selling outcomes might change that.
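To make the pricing difference concrete, here is a back-of-the-envelope sketch. The `Conversation` record, both billing functions, and every price are made up for illustration; none of it reflects Sierra's actual billing logic or rates.

```python
from dataclasses import dataclass


@dataclass
class Conversation:
    resolved: bool
    escalated_to_human: bool


def outcome_bill(conversations, price_per_resolution):
    """Charge only for issues the agent resolved without a human stepping in."""
    billable = sum(
        1 for c in conversations if c.resolved and not c.escalated_to_human
    )
    return billable * price_per_resolution


def seat_bill(seats, price_per_seat):
    """Classic SaaS pricing for comparison: pay per seat, whatever the outcome."""
    return seats * price_per_seat


# Hypothetical month: three clean resolutions, one escalation, one failure.
month = [
    Conversation(resolved=True, escalated_to_human=False),
    Conversation(resolved=True, escalated_to_human=False),
    Conversation(resolved=True, escalated_to_human=False),
    Conversation(resolved=True, escalated_to_human=True),
    Conversation(resolved=False, escalated_to_human=False),
]
```

With these invented numbers, `outcome_bill(month, 2)` bills three resolutions, while `seat_bill(10, 50)` charges the same amount whether the agent resolved everything or nothing.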

3. Your Engineering Excellence Won't Save You

Bret's most vulnerable moment came when Sheryl Sandberg told him he was failing as Facebook's CTO - not technically, but because he was trying to do everything himself. His advice: "Don't let something you're good at become who you are." Every startup stage needs different skills. Early on, product is everything. Later, it might be sales, operations, or partnerships. The hard part is recognizing when to stop coding and start listening to what ADT's head of operations actually needs.

4. Vertical Beats Horizontal Every Time

"I am fairly skeptical of horizontal AI platforms," Bret states plainly. The job of an AI agent for a telecom company versus a bank versus an insurance company is completely different. The companies providing immediate value around specific workflows will win. His rule: "The closer you get to solving a problem for a company, the more successful your business will be."

The takeaway isn't about architectures or pricing models - it's about a fundamental shift in how software gets built and sold. When AI can complete jobs rather than enhance productivity, the entire software industry restructures around outcomes rather than tools.

Absolute Zero and the Rise of Self-Taught Reasoners

What happens when a model not only solves tasks, but invents its own curriculum? That’s the vision behind Absolute Zero, a new paradigm from researchers at Tsinghua, BIGAI, and Penn State that challenges one of machine learning’s foundational assumptions: that intelligence depends on human-labeled data. Instead of relying on expert-curated prompts or answer keys, Absolute Zero Reasoner (AZR) trains itself via reinforced self-play—generating, solving, and learning from its own code-based reasoning tasks, all validated through a Python executor.

The core training loop is simple but powerful: one model proposes tasks (problems with just the right level of difficulty), then attempts to solve them. Feedback comes purely from execution results, with no ground-truth labels. Despite this, AZR not only generalizes out-of-distribution, it also outperforms models trained on tens of thousands of hand-crafted examples. On standard math and code benchmarks, AZR-7B posted gains of +10.2 points over its base model. At 14B, the gain grows to +13.2 points, which points to a trend: larger models benefit more from self-play, and AZR’s effectiveness scales with model capacity.
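Here is a rough sketch of one propose, solve, verify round. It assumes a toy proposer and a stand-in solver in place of the model, and it stops at the reward (the reinforcement learning update is left out). The helper names `run_program`, `propose`, `toy_solver`, and `self_play_round` are hypothetical, and this is not the authors' code.

```python
import random


def run_program(source, arg):
    """Execute `source` (which must define f) and return f(arg). No sandboxing here."""
    namespace = {}
    exec(source, namespace)
    return namespace["f"](arg)


def propose(rng):
    """Toy proposer: invent a small program plus an input for it."""
    k = rng.randint(2, 9)
    source = f"def f(x):\n    return x * {k} + 1\n"
    return source, rng.randint(0, 20)


def toy_solver(source, arg):
    """Stand-in for the model's predicted output; here it just runs the code."""
    return run_program(source, arg)


def self_play_round(rng, solver):
    """One round: propose a task, solve it, and score it by execution alone."""
    source, arg = propose(rng)
    expected = run_program(source, arg)  # the executor, not a human, sets the target
    answer = solver(source, arg)
    return 1.0 if answer == expected else 0.0
```

Swap `toy_solver` for a solver that guesses wrong and the reward drops to zero with no human in the loop, which is the whole trick: the Python executor plays the role of the answer key.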

But AZR isn’t just getting better—it’s getting weirder in useful ways. Over the course of training, the models develop distinct behaviors aligned to each task type. In abduction tasks, they iterate inputs through trial-and-error until the output matches; in deduction, they step through code with structured reasoning; in induction, they check test cases systematically like a dev debugging a new algorithm. Strikingly, many models begin interleaving comments with generated code, using them as intermediate plans—mimicking ReAct-style reasoning, without being trained on it. These emergent behaviors hint at deeper cognitive patterns forming through the unsupervised curriculum, and suggest that self-play might be more than a training shortcut—it could be a path to agentic reasoning.
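The three task types are easier to picture as three questions about the same program. The sketch below assumes the usual framing (deduction predicts an output, abduction recovers an input, induction writes a program that fits examples) and uses hypothetical helper names throughout. `abduce_by_search` mirrors the trial-and-error behavior described above, not the model's real internals.

```python
def load(source):
    """Run `source` and return the function f it defines. No sandboxing here."""
    namespace = {}
    exec(source, namespace)
    return namespace["f"]


def check_deduction(program, given_input, predicted_output):
    """Deduction: given program and input, was the predicted output right?"""
    return load(program)(given_input) == predicted_output


def check_abduction(program, target_output, proposed_input):
    """Abduction: given program and output, does the proposed input produce it?"""
    return load(program)(proposed_input) == target_output


def check_induction(examples, proposed_program):
    """Induction: does the proposed program reproduce every (input, output) pair?"""
    f = load(proposed_program)
    return all(f(x) == y for x, y in examples)


def abduce_by_search(program, target_output, candidates):
    """Trial and error: try inputs until one matches the target output."""
    for candidate in candidates:
        if check_abduction(program, target_output, candidate):
            return candidate
    return None


PROGRAM = "def f(x):\n    return x * x - 3\n"
```

All three checks reduce to running code and comparing results, which is why a single executor can grade every task type.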

There’s nuance, of course. Gains were modest with weaker base models (e.g., Llama3.1-8B), and “uh-oh moments” emerged—chains of thought that raised safety concerns, especially in more exploratory scenarios. The authors are clear that oversight is still necessary, even in self-improving systems. But overall, AZR pushes the field toward a powerful new idea: that reasoning agents might not need labels—they need experience. And the better they get at defining their own challenges, the faster they evolve.

For the Students, By the Degens ⚡️

Skip the lecture, catch the alpha. Students from Harvard, Princeton, Stanford, MIT, Berkeley and more trust us to turn the chaos of frontier tech into sharp, digestible insights — every week, in under 5 minutes.

This is your go-to power-up for Web3, AI, and what’s next.

Job & Internship Opportunities

Product Designer Intern (UK) - Apply Here | Palantir

Research Scientist (Field) - Apply Here | Goodfire

Research Fellow (All Teams) - Apply Here | Goodfire

Website Engineer - Apply Here | ElevenLabs

Data Scientist & Engineer - Apply Here | ElevenLabs

Product Designer Intern (NY) - Apply Here | Palantir

Applied AI Engineer - Paris (Internship) - Apply Here | Mistral

Brand Designer - Apply Here | Browserbase

A range of roles from YC-backed Humanloop - Apply Here

A range of roles from Composio - Apply Here

A range of roles from SylphAI - Apply Here

Partner Success Specialist - Apply Here | Cohere

Software Engineer Intern/Co-op (Fall 2025) - Apply Here | Cohere

GTM Associate - Apply Here | Sana

Sales Development Representative - Apply Here | Cresta

Brand Designer - Apply Here | Cresta

Product Engineer - Apply Here | Letta

Research Scientist - Apply Here | Letta

⚡️ Your Job Search, Optimized

We don’t just talk about building — we help you get in the room.

From protocol labs to VC firms, our students have landed roles at places like Coinbase, Pantera, dYdX, Solayer, and more.

Whether you’re polishing your resume, preparing for a technical screen, or figuring out where you actually want to be — we’ve got your back. 👇

Unsubscribe anytime - but if it’s not hitting, slide into our DMs. We’re building this with you, not for you.

The Agent UX Stack Starts Here

We’re closing out this week not with a lawsuit or a trillion-dollar restructure — but with something more useful: a cool new protocol you can build with today.

Meet AG-UI, an open protocol for connecting AI agents to real-world user interfaces — cleanly, consistently, and in real time.

AG-UI is a lightweight event-streaming protocol that acts as a translator between your agent backend (OpenAI, LangGraph, Ollama, custom code) and your frontend UI. It standardizes how text, tool calls, state updates, and lifecycle events move between the two — using a single HTTP request and a unified JSON event stream (or an optional binary channel if you’re feeling spicy).

It’s helpful because building user-facing agents is messy. You’ve got streaming tokens, function calls that need UI feedback, shared state that mutates over time, and no standard way to make it all work across frameworks. AG-UI fixes that. It gives you a stable contract for agent ↔ user interaction - no more cursed WebSockets, janky token hacks, or per-tool adapters.
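To get a feel for the idea, here is a minimal sketch of a single JSON event stream being folded into UI state. The event names and fields (`run_started`, `text_delta`, `tool_call`, `state_update`, `run_finished`) are invented for illustration and are not AG-UI's actual schema; check the spec for the real event types before building anything.

```python
import json


def agent_events(reply, tool_name):
    """Hypothetical backend: yield one JSON line per event for a single run."""
    yield json.dumps({"type": "run_started"})
    for token in reply.split(" "):
        yield json.dumps({"type": "text_delta", "text": token + " "})
    yield json.dumps({"type": "tool_call", "name": tool_name, "args": {"q": "status"}})
    yield json.dumps({"type": "state_update", "patch": {"ticket": "open"}})
    yield json.dumps({"type": "run_finished"})


def render(lines):
    """Hypothetical frontend reducer: fold the event stream into UI state."""
    ui = {"text": "", "tool_calls": [], "state": {}, "running": False}
    for line in lines:
        event = json.loads(line)
        kind = event["type"]
        if kind == "run_started":
            ui["running"] = True
        elif kind == "text_delta":
            ui["text"] += event["text"]  # streamed tokens append to the message
        elif kind == "tool_call":
            ui["tool_calls"].append(event["name"])  # UI can show a "calling..." chip
        elif kind == "state_update":
            ui["state"].update(event["patch"])  # shared state mutates over time
        elif kind == "run_finished":
            ui["running"] = False
    return ui
```

The appeal of this shape is that the frontend only needs one reducer over one stream, whatever framework produced the events on the other end.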

If you're building anything agent-facing - dashboards, copilots, editors, assistants - this is the spec to try out. And if you're not building yet… maybe now’s a good time to start.

And with that — that’s it for this week’s AI issue. We’ll see you in the next one.